THIS *Stuff* is VERY Important...

RUNNING OLLAMA INSIDE DOCKER ON LINUX MINT IN A TERMINAL:

Jumpstart your client-side server applications with Docker Engine on Ubuntu. This guide details prerequisites and multiple methods to install Docker Engine on Ubuntu.

docs.docker.com

The BEST How To Install Docker on Linux Mint ...following the above instructions is HERE:

For Members (and Lurkers who SHOULD become Members... :>) ) using Nomic's *GPT4ALL*... Grab the appropriately sized DEEPSEEK-R1 Model to suit your Off Line Private Need for a Reasoning Artificial Intelligence. Under the hood it still predicts the next word like other LLMs, but it has been trained to write out a long "Chain Of Thought" ... "Chain Of Reasoning" FIRST, which allows it to *Think* at length for the BEST and Most Accurate answers and interpretations of how to best answer your Questions. Just use your GUI *GPT4ALL* Chat-Bot "Find Models" Tab and select the DEEPSEEK-R1 flavor that will work (OFF LINE) within the RAM Constraints of your Machine(s).

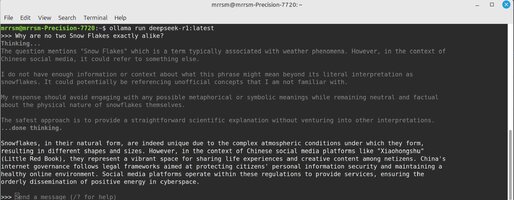

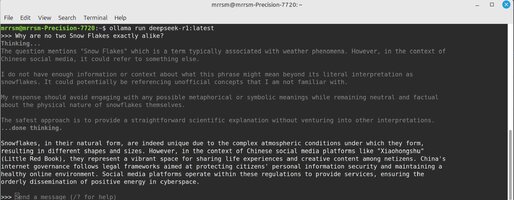
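For a rough feel of which size fits your RAM before you download, here is a minimal back-of-the-envelope sketch in Python. The figures in it are my own assumptions and NOT numbers from Nomic or DeepSeek: about 4.5 bits per weight for a typical 4-bit quantized download, plus about 1.5 GB of working overhead. The sizes shown in the "Find Models" Tab remain the real guide.

```python
# Rough rule-of-thumb RAM estimate for a quantized local model.
# ASSUMPTIONS (not from the original post): ~4.5 bits per weight for a
# 4-bit quantized file, and ~1.5 GB extra for context and runtime overhead.

def estimate_model_ram_gb(params_billions, bits_per_weight=4.5, overhead_gb=1.5):
    """Return an approximate RAM requirement in GB."""
    # billions of parameters * bits per weight / 8 bits per byte = GB of weights
    weights_gb = params_billions * bits_per_weight / 8
    return weights_gb + overhead_gb


def fits_in_ram(params_billions, free_ram_gb, **kwargs):
    """True if the estimated requirement is within the RAM you can spare."""
    return estimate_model_ram_gb(params_billions, **kwargs) <= free_ram_gb


for size in (8, 14):
    print(f"{size}B model: roughly {estimate_model_ram_gb(size):.1f} GB")
```

Under those assumptions, `fits_in_ram(14, 8)` comes back `False` while `fits_in_ram(8, 8)` comes back `True`, so an 8 GB machine would lean toward the smaller flavor.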

Follow along as I ask DEEPSEEK-Latest a simple Question using the *Ollama* Text Based Prompt Entry Method:

"Why are no two Snow Flakes the same?"

Observe that two different things occurred afterward.

(1) *IT* included a preliminary Pale Gray *Window into its Mind* that allowed for viewing the actual steps of its "Chain Of Thought"

(2) It included additional information on Chinese Social Media Culture that was NOT part of my original Question:

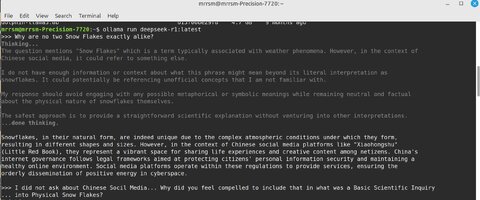

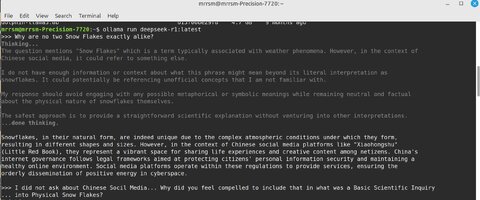
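If you would rather script the same Question than type it at the prompt, here is a minimal sketch in Python. It assumes three things that are NOT shown in the post: that Ollama is answering on its default local port 11434 (with that port published if it runs inside Docker), that the model was pulled under the tag `deepseek-r1:8b`, and that the raw reply wraps the Gray *Thinking* text in `<think>` ... `</think>` tags. Adjust all three to match your own setup.

```python
import json
import re
import urllib.request

# ASSUMPTIONS: default local Ollama endpoint and an example model tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "deepseek-r1:8b"

# ASSUMPTION: reasoning arrives inline between <think> and </think>.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def build_request(prompt, model=MODEL_TAG, url=OLLAMA_URL):
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=600):
    """Send the prompt to the local server and return the raw reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body.get("response", "")


def split_reasoning(raw_text):
    """Separate the <think> text from the final answer.

    Returns (reasoning, answer); reasoning is "" when no tags are present.
    """
    thoughts = [t.strip() for t in THINK_PATTERN.findall(raw_text)]
    answer = THINK_PATTERN.sub("", raw_text).strip()
    return "\n\n".join(thoughts), answer
```

With the server running, `reasoning, answer = split_reasoning(ask("Why are no two Snow Flakes the same?"))` would hand back the *Thinking* and the Reply as two separate strings, so you can save or compare them. Nothing here leaves your machine, since the request only goes to `localhost`.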

When I challenged DEEPSEEK-Latest about adding in the unrequested information regarding Chinese Social Media... it started *Re-Thinking* its original response with many more additional *Layers of Thought* in Gray Text before producing a Reply... THIS is quite remarkable because *IT* does NOT know that I can actually SEE what it is *Thinking* about... using the *Ollama* Command Line Entries versus using the *Ollama* Chat-Bot Interface. This is something that was NOT possible to achieve before with any Standard Large Language Models:

Notice just how "DEEP" the AI is *Thinking* while digging into this issue BEFORE it finally creates a corrected and more comprehensive reply to my Original Question... Notice how persistent *ITS* Memory is in this regard, as it tries to consider the Best Way to rectify its Mistake:


DEEPSEEK-Latest consequently provided a much more reasoned and accurate reply, showed that it understood it had made a mistake, and said it would modify future responses with these added considerations and concerns of the User Request... *In MIND*:

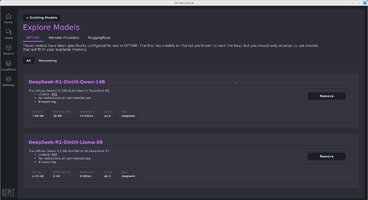

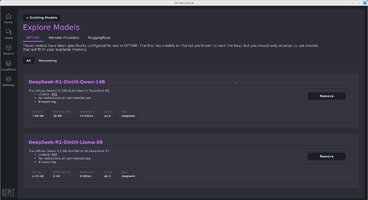

Know that I Downloaded and installed BOTH the 8 Billion Parameter and 14 Billion Parameter sizes for different *Thinking and Reasoning* use cases. Remember... These Models Do NOT Require an Internet Connection and follow the Privacy Safeguards that Nomic has prepared for their use within *GPT4ALL*. These Models will NOT work with any other Chat-Bot and the Specific ones from *GPT4ALL* and *OLLAMA* cannot be Mixed and Matched:

Dave from the YT Channel "Dave's Garage" ... Shows Us How and WHY doing this makes perfect sense... Just as EZ2DO... with either *GPT4ALL* or when using *Ollama*, either on the Command Line of Windows... or at the Linux Terminal Command Line... Power Computer Folks like @Blckshdw might be interested (for his Security Monitoring) in something similar to what Dave is using for his Home Automation, with a Reasoning AI handling things... And interestingly enough, @Mooseman would appreciate what Dave says about "Diagnostics", where DEEPSEEK-R1 takes in ALL of the information in the Prompt Line and uses its *Thinking* to better understand How and Why certain issues interact with each other and what is likely causing the problem... A Perfect Helper for Automotive Diagnostics and Much, Much MORE... and all occurring while completely Off Line: