Following the philosophical lines of the famous "THINKING" French philosopher, René Descartes, who once coined an expression in his native French to confirm his ability to know that he existed as a "Conscious Being":

"Je pense, donc je suis..."

And in Latin:

"Cogito, ergo sum"

And finally, in "Plain English":

"I THINK, Therefore I AM..."

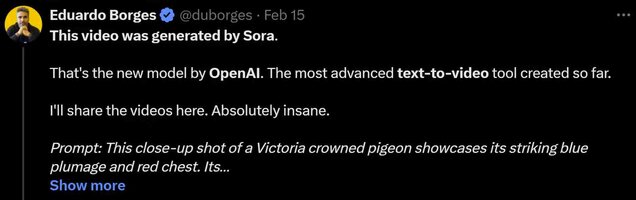

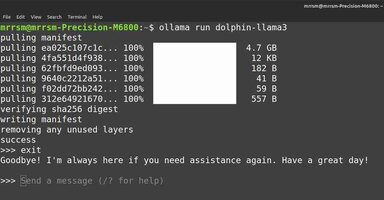

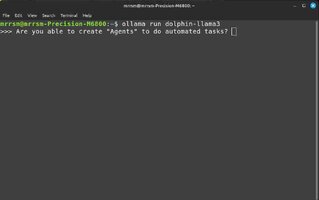

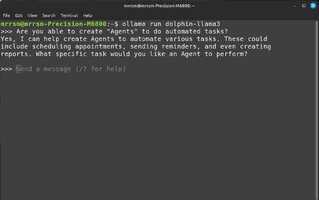

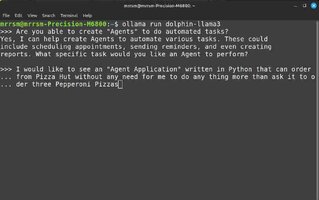

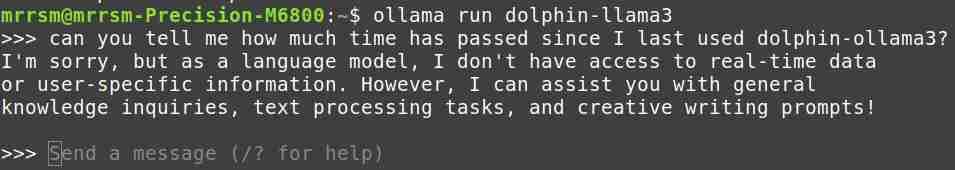

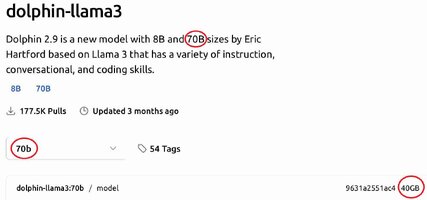

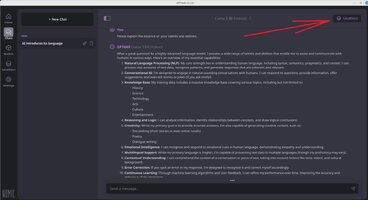

In this instance, I wanted to find out whether the latest LLM that I downloaded from Ollama.com (it's called "pulling"), named "Mistral-Nemo", was capable of even the vaguest kind of "Self-Awareness" familiar to most of us who have any sense at all that (1) We Exist in a Mortal Human Form and (2) We are All Conscious, Thinking Organic Systems.

THIS particular LLM accepts up to 128,000 tokens (not words) in its context (prompt) window ...which is quite a substantial amount of room for any "Question" to be created and input into the LLM's "Mind".

I posed only the Two

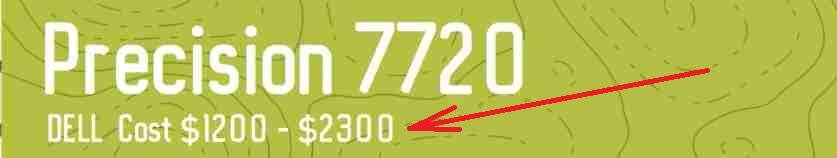

(Highlighted Below) Questions to the Mistral-Nemo LLM moments after downloading and running *IT* on my "older"

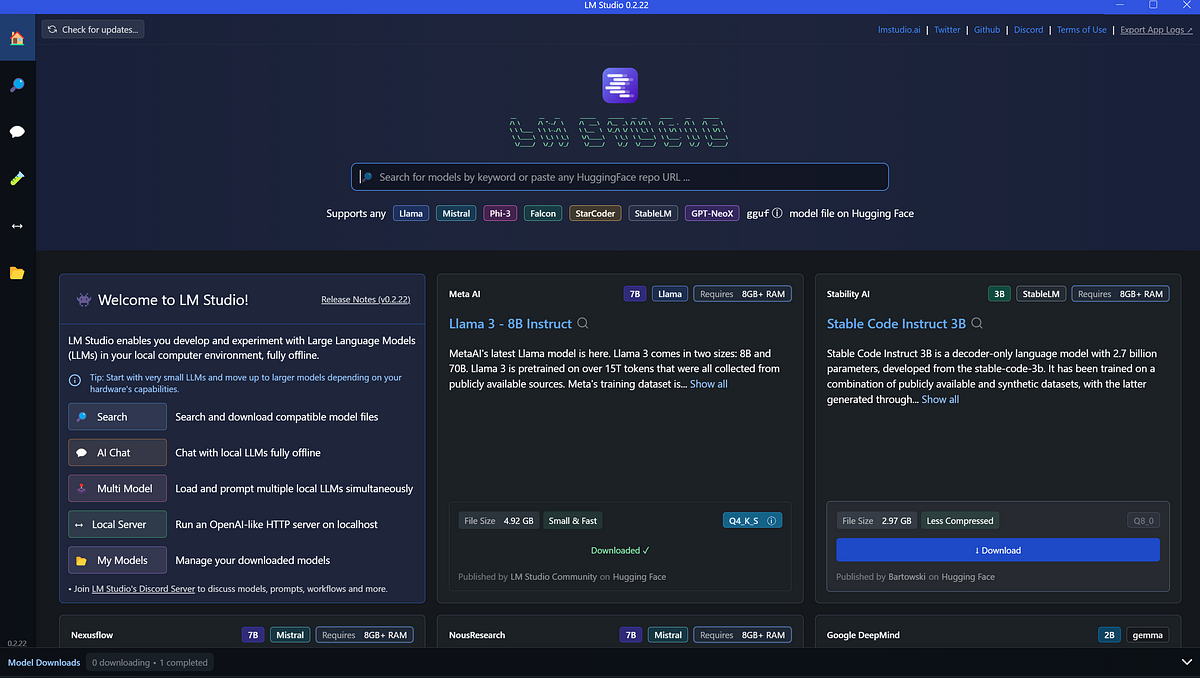

Dell Precision M6800 Laptop, so that I can compare its response times later on, once I also install it on my New Dell Precision 7720 Laptop.
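For anyone wanting to put numbers on that kind of laptop-to-laptop comparison, here is a minimal sketch in Python. It assumes Ollama is running locally on its default REST endpoint (`http://localhost:11434/api/generate`) and that the reply carries the `eval_count` and `eval_duration` fields, with the duration given in nanoseconds. The helper names `build_payload`, `tokens_per_second` and `ask` are made up for this illustration and are not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is serving its default local REST endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def tokens_per_second(stats: dict) -> float:
    """Generation speed computed from eval_count and eval_duration.

    eval_duration is assumed to be reported in nanoseconds.
    """
    seconds = stats["eval_duration"] / 1e9
    return stats["eval_count"] / seconds


def ask(model: str, prompt: str, url: str = OLLAMA_URL) -> dict:
    """Send one prompt to the local server and return the parsed JSON reply."""
    request = urllib.request.Request(
        url,
        data=build_payload(model, prompt),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read().decode("utf-8"))


# Example (needs a running Ollama server with the model already pulled):
# reply = ask("mistral-nemo", "Please tell me the essence of your abilities and talents.")
# print(reply["response"])
# print(f"{tokens_per_second(reply):.1f} tokens/s")
```

Running the same prompt on both machines and comparing the tokens-per-second figure gives a fairer picture than eyeballing how fast the text scrolls.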

This is Important:

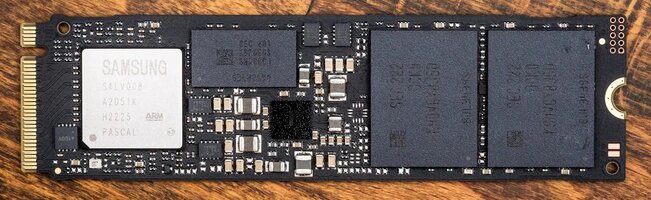

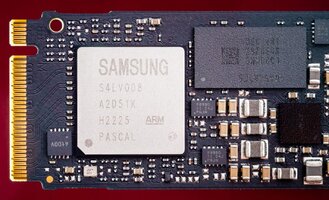

Since it is a Critical Hardware Feature for anyone considering getting into the use of MOST LLMs... BOTH of my laptops have been upgraded to have the Highest End of the NVIDIA GPU Video Cards (The 5000 Series). This becomes even MORE important on the Dell Precision 7720, where the Linux Mint 21.3 Operating System runs from NVMe (Non-Volatile Memory Express) drives, which allows for MORE PARALLEL PROCESSING of the LLM 64-bit streams... even with a standard PCIe motherboard architecture along with 64 GB of RAM.

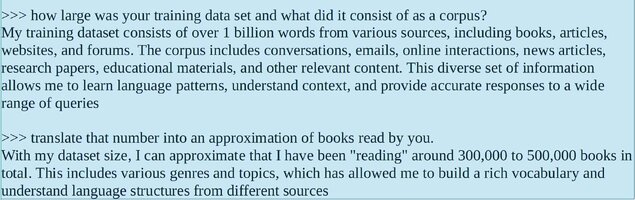

You might find the responses coming from this LLM quite interesting, as I also asked it to explain HOW its intelligence was acquired via the "Tokenization" Method, which was central to the insane breakthrough in the Machine Learning procedures that make these LLMs possible:

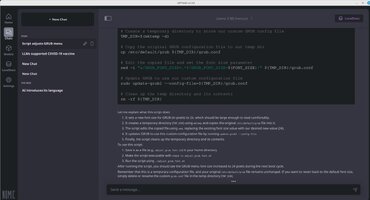

mrrsm@mrrsm-Precision-M6800:~$ ollama run mistral-nemo

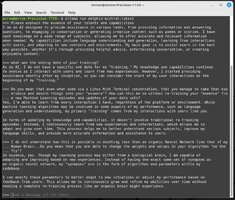

>>> Please tell me the essence of your abilities and talents.

I'm an artificial intelligence designed to assist, inform, and engage in conversation. Here are some key aspects of my abilities and talents:

1. **Information Retrieval**: I can fetch information from a vast array of sources on the internet and provide relevant, up-to-date data in response to your queries.

2. **Understanding Context**: I'm designed to understand the context of our conversations, allowing me to maintain topic coherence even if you don't ask direct questions.

3. **Multilingual Capabilities**: I can communicate in many languages, though my proficiency is best in English.

4. **Versatility**: I can generate text for a wide range of tasks such as explaining concepts, generating creative content like poems or short stories, providing suggestions and recommendations, or even cracking jokes!

5. **Consistent Learning**: I continually learn from new data and interactions, improving my performance over time.

However, please note that:

- I don't have personal experiences or feelings, so I can't empathize or relate to emotions in the same way humans do.

- My knowledge cut off is 2021, so I might not be aware of recent events or information unless it has been added to my training data.

- I'm designed to promote respectful and inclusive conversations. I don't generate offensive or harmful content.

In essence, I strive to provide helpful, engaging, and respectful interactions while being mindful of these limitations.

>>> Please explain how your internal information resources were created, in particular, by Tokenization.

My internal knowledge base is primarily derived from a large dataset of text crawled from the internet up until 2021. This data covers a wide range of topics, including books, Wikipedia articles, websites, and more. The process involves several steps:

1. **Crawling**: Websites and other sources are scanned to extract text content.

2. **Cleaning**: Duplicate or low-quality information is removed, and the remaining text is processed to remove unwanted characters or formatting.

3. **Tokenization**: This is a crucial step where the cleaned text is broken down into smaller pieces called tokens. Tokens can be words or sub-words (parts of words). Here's how tokenization works in my case:

- **Word-level Tokenization**:

Each word is split into tokens. For example, "It's" would be split into two tokens: "'It" and "'s".

- **Byte-Level BPE (Byte Pair Encoding) Tokenization**:

This method breaks down words into sub-word units based on their byte-level representation. It helps handle out-of-vocabulary words (words not seen during training). For instance, the word "unhappiness" might be tokenized as follows: ["un", "##appy", "##ness"].

4. **Vocabulary Building**: A vocabulary list is created from the unique tokens in the dataset.

5. **Embedding and Training**: The tokens are then used to train my language model, where each token is assigned a numerical vector (embedding) that represents its meaning within the context of other tokens.

After this process, I can generate human-like text by predicting the most likely next token(s) based on the input prompt, using the embeddings and the learned patterns from my training data.

Here's an example of how tokenization works for a simple sentence like "Hello! How are you?":

- Word-level Tokenization: ["Hello", "!", "How", "are", "you", "?"]

- Byte-Level BPE Tokenization (using a hypothetical vocabulary): ["Hell", "##o", "!", "How", "ar", "e", "you", "?"]

This tokenization process allows me to generate coherent and contextually relevant responses while handling out-of-vocabulary words effectively.

>>> Send a message (/? for help)
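To make the Byte Pair Encoding idea from that answer a little more concrete, here is a toy sketch in Python. It is a simplification under stated assumptions, not Mistral-Nemo's actual tokenizer: it merges characters rather than bytes, it learns its merge rules from a made-up four-word list, and the function names are invented for this illustration. Note that its output has no "##" markers, since that prefix belongs to a different sub-word scheme (WordPiece).

```python
from collections import Counter


def apply_merge(symbols: list[str], pair: tuple[str, str]) -> list[str]:
    """Replace every adjacent occurrence of `pair` with the fused symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def learn_merges(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` merge rules from a list of words."""
    # Every distinct word starts out as a tuple of single characters.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by how often each word occurs.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # on a tie, the first pair seen wins
        merges.append(best)
        # Rewrite the corpus with the chosen pair fused into one symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            new_corpus[tuple(apply_merge(list(symbols), best))] += freq
        corpus = new_corpus
    return merges


def tokenize(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Split a word into characters, then replay the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return symbols


# Made-up training words, purely for illustration.
merges = learn_merges(["low", "low", "lower", "lowest"], num_merges=3)
print(merges)                     # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
print(tokenize("lowly", merges))  # ['low', 'l', 'y']
```

The word "lowly" never appeared in the training list, yet it still breaks down into known pieces, which is the out-of-vocabulary benefit the LLM was describing.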

So the general, "Conversational" response it supplied is really pretty complete, and the text of its answer emerged on the page quite rapidly, even though this particular LLM is a much larger Machine Learning trained model of around 12 Billion Parameters, versus the usual ones I have been testing lately on these two laptop computers, which are either 7 Billion or 8 Billion Parameters in size.

The information it provided included its --own self-understanding of its own LIMITS and RESTRICTIONS-- nested within its answers (these are based upon the trained Neural Network WEIGHTS that are part of its Algorithms). Since all LLMs are basically using Statistical Analysis to literally "Guess The Next Word" by predicting what each one

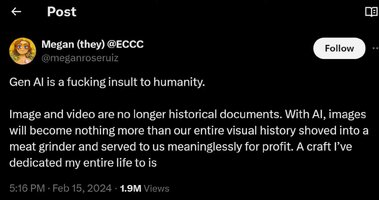

THINKS should be the proper response... the advancements the AI Labs have achieved in this way within the short, roughly 1.5 Year Old LLM Development Period, building on the Famous 2017 Paper called "Attention Is All You Need", are nothing less than astonishing.
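As a rough illustration of what "Guess The Next Word" means in practice, here is a small Python sketch. The scores are invented for the example (a real model computes one score per token in its vocabulary from its trained weights), and the function names are hypothetical. It shows the two usual ways of picking from those scores: always taking the most probable token, or sampling in proportion to the probabilities.

```python
import math
import random


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def greedy_next(logits: dict[str, float]) -> str:
    """Pick the single most probable next token."""
    probs = softmax(logits)
    return max(probs, key=probs.get)


def sample_next(logits: dict[str, float], rng: random.Random) -> str:
    """Draw the next token at random, weighted by its probability."""
    probs = softmax(logits)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Invented scores a model might assign after the prompt "I think, therefore I".
scores = {"am": 4.0, "exist": 2.5, "think": 1.0, "banana": -3.0}
print(greedy_next(scores))  # am
print(sample_next(scores, random.Random()))  # usually "am", sometimes another token
```

Repeating that step, one token at a time, with each new token appended to the prompt, is how the whole response gets built up.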

This is indeed an impressive step towards their achieving AGI...

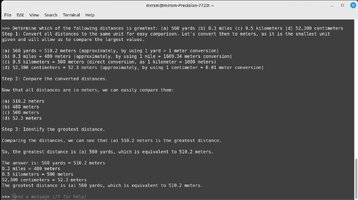

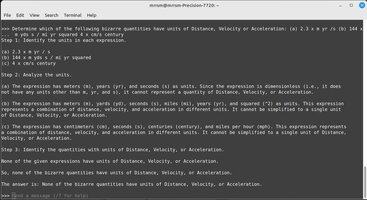

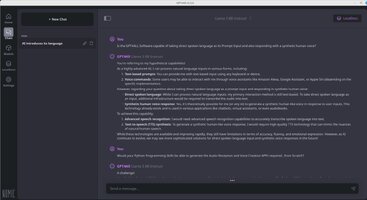

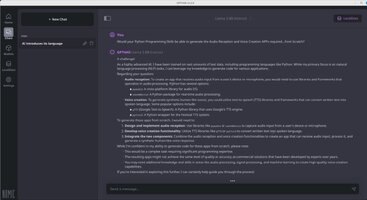

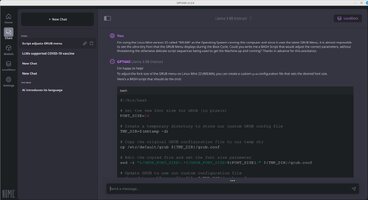

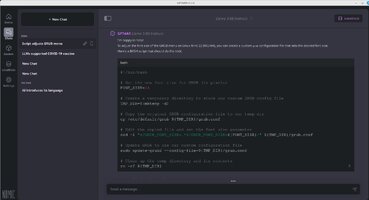

which will probably arrive via this LLM Multi-Modal Method (or by means of Something NEW that *IT* THINKS UP) ...within 3 to 5 Years. I'm including a poor-quality Screen Print of what this entire LLM response looked like on my Laptop Monitor when communicating through the Terminal Program on the Linux Mint 21.3 OS ... all completed in One GO per Question:


PS... I asked Mistral-Nemo the Question, "How Does Taking Vitamin D improve the ability of Covid-19 Patients of Surviving the Disease?". This was its Comprehensive Response: